Project and Team Context

I worked with a team of ABBYY analysts on the mobile application and web version of a blended-learning platform for the second-largest language school in Russia. I had been a student at the largest school myself, so I was confident that we were doing a good job.

All written documentation was produced in Jira (Atlassian) and Google Docs, schemas in draw.io, and wireframes and user flows in Figma.

Analysis

Building on the existing functional decomposition of the website and the results of marketing and UX studies, I produced a functional decomposition for the mobile application. I was also responsible for the role-function table, the permission matrix behind role-based access control (RBAC), because each function could be open or closed for each role in the system. For example, a teacher could not add new content blocks with exercises or learning materials, but a content editor could.
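To make the role-function table concrete, here is a minimal Python sketch of it as a permission matrix. Only the teacher and content editor roles and the "add content block" function come from the project; the student role and the other function name are assumptions added for illustration, and this is not the project's actual implementation.

```python
from enum import Enum


class Role(Enum):
    STUDENT = "student"                # assumed role, for illustration
    TEACHER = "teacher"
    CONTENT_EDITOR = "content_editor"


# Role-function matrix: each function maps to the set of roles it is open for.
# Function names are hypothetical.
PERMISSIONS: dict[str, frozenset[Role]] = {
    "view_learning_materials": frozenset({Role.STUDENT, Role.TEACHER, Role.CONTENT_EDITOR}),
    "add_content_block": frozenset({Role.CONTENT_EDITOR}),
}


def is_allowed(role: Role, function: str) -> bool:
    """Return True if the function is open for the role; unknown functions are closed."""
    return role in PERMISSIONS.get(function, frozenset())


def functions_for(role: Role) -> list[str]:
    """List every function open for a role, sorted by name."""
    return sorted(name for name, roles in PERMISSIONS.items() if role in roles)


print(is_allowed(Role.TEACHER, "add_content_block"))         # False
print(is_allowed(Role.CONTENT_EDITOR, "add_content_block"))  # True
```

Treating unknown functions as closed by default is a deliberate choice in this sketch: a function someone forgot to add to the table stays unavailable rather than silently open to every role.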

Design Part

Some requirements were inherited from the web service, which I also partly covered, and others were written specifically for the mobile application. Each requirement described the possible operations on a database table, the table's properties and their data types, and the use cases related to that requirement. This level of abstraction and cross-linking reduced the back-and-forth with developers, analysts and designers, provided the whole team could read and understand the documentation.

Finally, all descriptions and requirements were cross-linked in the documentation. Each use case was tied to a requirement and mapped to one or more visuals (screens or modal views). Thanks to the function and role decomposition, anyone on the team could find out which visuals were available to a given role.
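The linking described in the last two paragraphs can be modelled as a small data structure. The Python sketch below is an illustration under assumptions, not the documentation format we actually used: a requirement lists table operations, typed properties and linked use cases; each use case points to one function and to its screens; and a role's visible screens are derived through a role-function table. All table, function, role and screen names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One requirement: operations on a database table, its typed properties, linked use cases."""
    table: str
    operations: set[str]                  # e.g. {"create", "read", "update"}
    properties: dict[str, str]            # column name -> database type
    use_cases: list[str] = field(default_factory=list)  # names of linked use cases


@dataclass
class UseCase:
    """A use case tied to one function from the decomposition and to the visuals showing it."""
    name: str
    function: str
    screens: list[str]                    # screens or modal views


def screens_for_role(role: str, use_cases: list[UseCase],
                     permissions: dict[str, set[str]]) -> list[str]:
    """Return the sorted screens a role can reach via the functions open for it."""
    return sorted({
        screen
        for uc in use_cases
        if role in permissions.get(uc.function, set())
        for screen in uc.screens
    })


# Hypothetical example data, not taken from the project.
permissions = {"take_quiz": {"student"}, "add_content_block": {"content_editor"}}
use_cases = [
    UseCase("Complete a quiz", "take_quiz", ["Quiz screen", "Result modal"]),
    UseCase("Add an exercise block", "add_content_block", ["Block editor screen"]),
]
quiz_results = Requirement(
    table="quiz_results",
    operations={"create", "read"},
    properties={"quest_id": "uuid", "mark": "integer"},
    use_cases=["Complete a quiz"],
)

print(screens_for_role("student", use_cases, permissions))  # ['Quiz screen', 'Result modal']
```

Deriving screens from the role-function table, instead of listing screens per role by hand, keeps the two views consistent when a permission changes.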

Although my main focus was the mobile wireframes, I also had to specify other communication channels (push notifications or SMS) wherever my use cases touched them, for example by declaring a trigger and a message format such as “Checked Quest %mark% for %Quest_Name%”.
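A trigger plus a format string with %name% placeholders is easy to check mechanically. The minimal Python sketch below fills such a template; the message format is the one quoted above, while the trigger name `quest_checked`, its channel list and the sample values are hypothetical.

```python
import re

# Matches placeholders such as %mark% or %Quest_Name%.
PLACEHOLDER = re.compile(r"%(\w+)%")

# Hypothetical trigger registry: trigger name -> channels and message format.
NOTIFICATIONS = {
    "quest_checked": {
        "channels": ("push", "sms"),
        "template": "Checked Quest %mark% for %Quest_Name%",
    },
}


def render(template: str, values: dict[str, str]) -> str:
    """Substitute every %name% placeholder; a missing value raises KeyError."""
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


def messages_for(trigger: str, values: dict[str, str]) -> list[tuple[str, str]]:
    """Build one (channel, text) pair per channel configured for the trigger."""
    config = NOTIFICATIONS[trigger]
    text = render(config["template"], values)
    return [(channel, text) for channel in config["channels"]]


print(messages_for("quest_checked", {"mark": "5", "Quest_Name": "Past Simple"}))
```

Raising an error on a missing value, instead of sending the raw placeholder, means a message such as "Checked Quest %mark% for ..." never reaches a student by mistake.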

Structure

The first step was to create a hierarchy of screens. Yes, I had the functional decomposition, use cases and requirements, but I did not want to carry their structure over to the screen structure, because how we organize our database or documentation is not the user's problem.

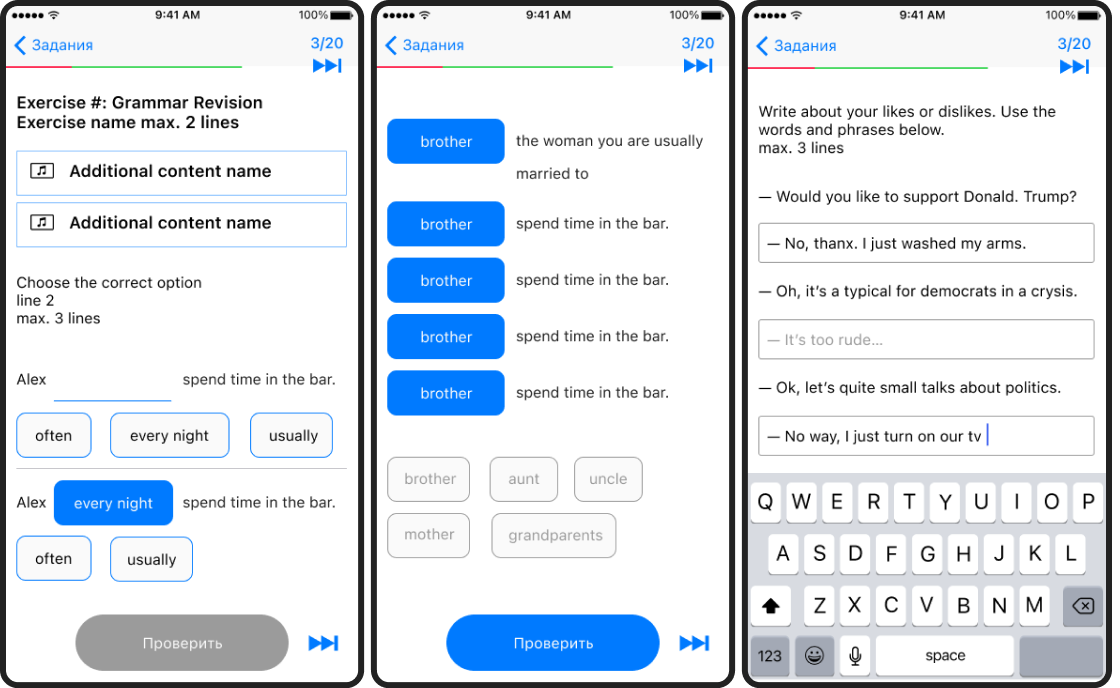

In addition to the standard authorization functions, the user could access the start screen, the profile and the learning process. The learning process consists of materials (video or text with embedded elements such as audio, images and tables) and quizzes of different types. I created requirements and designs for 10 quiz types, including spell check, find the mistakes, type text, type an essay or dialog lines, drag from a list into the text, picture and table.

Mapping

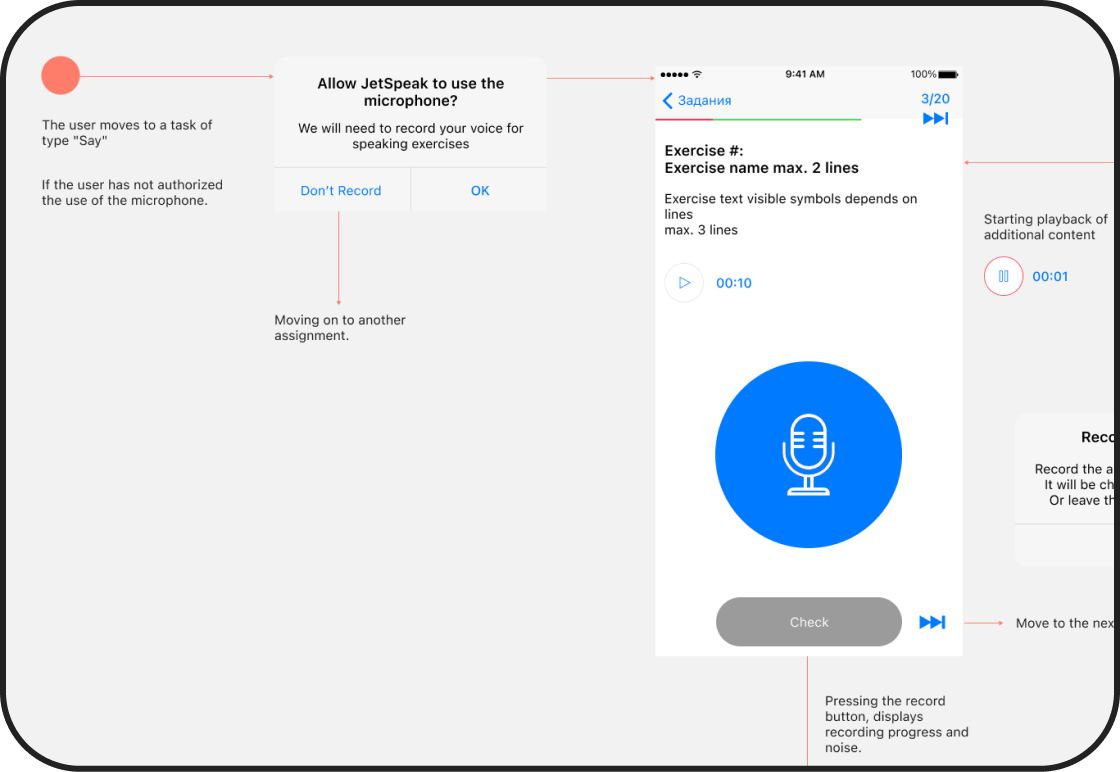

In addition to a draw.io schema for each screen, I created a visual map (a user flow) for each Jira task to show how the screens connect. User flows were based on the use cases and were drawn once all the wireframes for the flow had been created. The screenshot shows a fragment from the beginning of a quiz in which users train their speaking skills by recording audio according to the tasks.

Quests and Questions

For every quest I had to design a solved state, shown when the user answered everything correctly, and an incorrectly solved state with two options: either show all the correct answers after the first attempt, or show only the correctly completed parts and give the student a second chance. The methodology department chose the option depending on the task and the student's language level. Depending on their level, students also worked with pictures, short texts or long texts.
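The two wrong-answer variants can be expressed as a small state function. This is a minimal Python sketch under simplifying assumptions: a quiz is a list of gaps with exactly one correct answer each, answers are compared by exact string match, and the mode names are hypothetical. Real quiz types such as essays or spell check would need richer checking.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FeedbackMode(Enum):
    SHOW_ALL_ANSWERS = "show_all_answers"      # reveal every correct answer after the first try
    KEEP_CORRECT_PARTS = "keep_correct_parts"  # keep only correct parts, allow a second chance


@dataclass
class Feedback:
    solved: bool
    shown: list[Optional[str]]  # per gap: the text shown, or None for a gap to redo
    can_retry: bool


def evaluate(answers: list[str], key: list[str], mode: FeedbackMode) -> Feedback:
    """Compare a first attempt with the answer key and build the solved or wrong state."""
    if len(answers) != len(key):
        raise ValueError("every gap needs exactly one answer")
    correct = [given == expected for given, expected in zip(answers, key)]
    if all(correct):
        return Feedback(solved=True, shown=list(key), can_retry=False)
    if mode is FeedbackMode.SHOW_ALL_ANSWERS:
        return Feedback(solved=False, shown=list(key), can_retry=False)
    # Second-chance variant: correct gaps stay filled, wrong ones are cleared.
    return Feedback(
        solved=False,
        shown=[expected if ok else None for expected, ok in zip(key, correct)],
        can_retry=True,
    )


key = ["went", "saw", "came"]
print(evaluate(["went", "see", "came"], key, FeedbackMode.KEEP_CORRECT_PARTS))
```

Keeping the mode as a parameter mirrors how the choice was made outside the quiz itself, by the methodology department per task and level.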

In the screenshots, the first two screens show the same quiz type in different versions. On the first screen, after viewing additional materials, the user drags items from a list of words (or sentences) into the gaps in the text (n-1, with a set of options for each line). The second screen is also a drag-and-drop task, but all the words have to be distributed among all the sentences. The third screen is completely different: the user builds a dialog by filling the gaps in the speech with text input.